Introduction

Even today, when customers run workloads on managed Kubernetes (K8s) offerings like Azure Kubernetes Service (AKS) and the upgrade process is increasingly automated, most still struggle to keep up with minor version upgrades. For this reason, providers like Microsoft now designate a Long-term Support (LTS) minor version within the current support window; at the time of writing, that version is 1.27. At KubeCon 2023 in Chicago, Microsoft hosted a session led by Jeremy Rickard on the difficulties of K8s upgrades, aimed at understanding why teams run into them. In my experience, these are some of the reasons teams struggle:

Diverse workloads: Many different workloads and add-ons running on a (multi-tenant) cluster increase the odds that something is not compatible with the new minor version.

Operational overhead: Teams often prioritize new feature development or other operational tasks over the routine maintenance work of upgrading.

Fear of Downtime: Because a minor version upgrade can't be rolled back, teams have reasonable concerns about downtime or disruption to production workloads, and those concerns can deter them from the regular upgrades that maintain reliability.

Keeping Up with Changes: K8s evolves rapidly, and keeping up with the latest best practices, features, and deprecations requires continuous learning.

In this post, I provide guidance on key considerations for K8s minor version upgrades in AKS, covering culture, process, and technology.

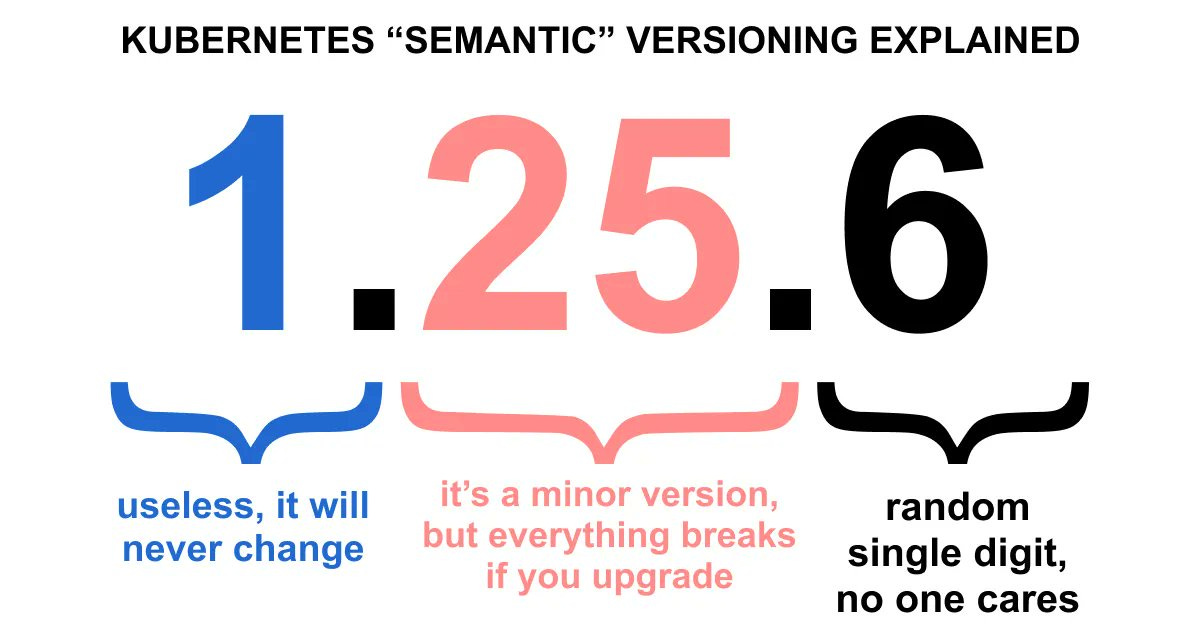

Understanding K8s Versioning

Before we talk about upgrades, we need to understand the versioning scheme in K8s. K8s versions are expressed as x.y.z, where x is the major version, y is the minor version, and z is the patch version, following the Semantic Versioning approach. This scheme helps cluster admins and developers plan upgrades by indicating the scope and impact of changes in each release. Major versions typically introduce breaking changes, although K8s has not yet released a major version beyond 1. Minor versions generally add backward-compatible features, but they can also remove deprecated APIs, which breaks workloads that still use them. These removals typically affect alpha or beta API versions; under the K8s deprecation policy, GA (stable) API versions can be deprecated but are not removed within a major version. Patch versions contain backward-compatible bug fixes, so they are good candidates for automation and rarely cause issues.

Image: Please note that the image presented here is a satirical take on semantic versioning and should not be taken as an accurate representation of the versioning system.

Reference: Semantic Versioning 2.0.0 | Semantic Versioning
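To make the x.y.z scheme concrete, here is a minimal sketch of a shell function that reports which component changes between two versions. The name `upgrade_kind` is hypothetical; the function assumes plain `x.y.z` strings without a leading `v` and is only an illustration, not part of any AKS tooling.

```bash
# upgrade_kind FROM TO
# Hypothetical helper: prints which semantic-versioning component changes
# between two x.y.z versions (major, minor, patch, or none).
upgrade_kind() {
  local from_major from_minor to_major to_minor
  from_major=$(echo "$1" | cut -d. -f1)
  from_minor=$(echo "$1" | cut -d. -f2)
  to_major=$(echo "$2" | cut -d. -f1)
  to_minor=$(echo "$2" | cut -d. -f2)

  if [ "$from_major" != "$to_major" ]; then
    echo "major"   # may contain breaking changes
  elif [ "$from_minor" != "$to_minor" ]; then
    echo "minor"   # new features; may remove deprecated APIs
  elif [ "$1" != "$2" ]; then
    echo "patch"   # backward-compatible bug fixes
  else
    echo "none"
  fi
}
```

For example, `upgrade_kind 1.27.7 1.28.3` prints `minor`, the kind of change that deserves the review described in the rest of this post, while `upgrade_kind 1.27.3 1.27.7` prints `patch`.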

Preparing for an Upgrade

Document K8s Upgrade Process

Given that parts of the upgrade process are manual, documenting it is critical for any DevOps or platform team. Documentation provides consistency and standardization, which reduces variability and potential errors. Good documentation also spreads knowledge across the team and helps onboard new members. A well-documented process (e.g., wikis, runbooks, checklists, and verification steps) helps you anticipate potential challenges. Some teams that manage AKS clusters with Infrastructure as Code (IaC) assume documentation is superfluous because IaC is “self-documenting”. It isn't: IaC defines the infrastructure's desired state, but it doesn't explain the rationale, context, or operational procedures behind that configuration. As you gain experience with K8s upgrades, documentation also becomes a channel for improving the process, especially after post-mortems following an incident. Finally, keeping documentation up to date is an ongoing, iterative process.

Assessing your Current K8s Cluster for Upgrade

Assessing the current environment before a K8s upgrade involves several steps. First, determine the existing K8s version of the control plane and of each node pool. Next, thoroughly review workload compatibility, particularly checking for deprecated APIs and ensuring that K8s manifests and configurations work with the new version. Evaluate cluster health and performance metrics to confirm the cluster is in a good state before you start. Finally, assess dependencies such as third-party tools, integrations, Helm charts, add-ons, and custom controllers for compatibility with the new K8s version.
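The first steps of this assessment can be scripted. The following is a minimal sketch, assuming the Azure CLI and kubectl are already signed in to the target cluster and that your identity can read the API server's `/metrics` endpoint; the function name `assess_cluster` is hypothetical. The `apiserver_requested_deprecated_apis` metric complements manifest scanning because it reflects deprecated APIs that live clients actually called, but it only covers requests seen by the API server instance that answers, since that instance last restarted. Verify the property names `currentKubernetesVersion` and `currentOrchestratorVersion` against the JSON output of your CLI version.

```bash
# assess_cluster RESOURCE_GROUP CLUSTER_NAME
# Hypothetical helper: prints a pre-upgrade snapshot of versions, node
# health, and deprecated API usage. Assumes az and kubectl are signed in.
assess_cluster() {
  local rg="$1" cluster="$2" metrics

  echo "== Control plane version =="
  az aks show --resource-group "$rg" --name "$cluster" \
    --query currentKubernetesVersion -o tsv

  echo "== Node pool versions =="
  az aks nodepool list --resource-group "$rg" --cluster-name "$cluster" \
    --query "[].{name:name, version:currentOrchestratorVersion}" -o table

  echo "== Node status =="
  kubectl get nodes

  echo "== Deprecated APIs requested since the API server started =="
  # Read the metrics first so a failed kubectl call isn't reported as "none".
  metrics=$(kubectl get --raw /metrics) || {
    echo "could not read API server metrics" >&2
    return 1
  }
  # Each series is labeled with the API group, version, resource and removed_release.
  printf '%s\n' "$metrics" | grep '^apiserver_requested_deprecated_apis' \
    || echo "none recorded"
}
```

Usage: `assess_cluster myResourceGroup myAKSCluster`.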

Scanning for API deprecations within a CI/CD pipeline, instead of relying solely on AKS halting an upgrade when it detects deprecated API usage, identifies potential issues proactively over time. This approach detects invalid YAML schemas and deprecated APIs for new K8s minor versions, as well as dependency problems, before changes are deployed. Open-source tools like kubeconform or pluto can efficiently scan K8s YAML manifests in the pipeline. Note that kubeconform validates against the latest ("master") schemas unless you pass `-kubernetes-version` (for example, `-kubernetes-version 1.28.0`), and pluto checks against a specific release with `--target-versions` (for example, `--target-versions k8s=v1.28.0`). The example below uses kustomize to render the K8s resource manifests for an app's staging overlay and pipes them directly into kubeconform for validation. Catching issues this early in the development cycle reduces the risk of deployment failures and minimizes downtime during upgrades.

```bash
kustomize build apps/my-app/overlays/environments/stage | kubeconform -verbose
```

```
stdin - Ingress stage-my-app is valid
stdin - Service stage-my-web-app-service is valid
stdin - Deployment stage-my-app-deployment is valid
```

Review Release Notes & Change Log

Although AKS now alerts on API deprecations, cluster admins should still read the release notes and notify development teams (e.g., by email or chat) of any possible impact, especially when they use beta features. Release notes offer a broader view of changes than API deprecations alone, including new features, enhancements, bug fixes, and performance improvements. They provide context and details that can be critical for understanding the impact on your specific environment. They also often list deprecated features and the timelines for their removal, which is vital for future-proofing your deployments.

The release team for each K8s minor version typically hosts a webinar on the CNCF YouTube channel, which you can attend to ask questions; if you can't attend, the sessions are recorded. The agenda typically covers major themes, enhancements, and deprecations. At the time of writing, the most recent release is 1.28, and you can access its webinar here: CNCF 1.28 Webinar

Minimize Disruption

Incorporating Pod Disruption Budgets (PDBs) is a strategic way to minimize downtime during planned maintenance of an AKS cluster. A PDB lets you define the minimum number of pods that must remain available (`minAvailable`), or the maximum number that may be unavailable (`maxUnavailable`), for an application during voluntary disruptions such as cluster upgrades or node maintenance. Kubernetes honors these limits when it evicts pods through the Eviction API, for example during a node drain, which maintains application availability and service continuity. For example, if a deployment has 10 replicas and you can tolerate up to 30% of its pods being unavailable during an upgrade, you can set a PDB that allows at most 3 pods to be disrupted at a time. A PDB for this scenario might look like this:

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: my-pdb
spec:
  maxUnavailable: 30%
  selector:
    matchLabels:
      app: my-app
```

With 10 replicas, this means at least 7 pods (70%) stay available even during maintenance, so your application continues to serve users with minimal impact. Keep in mind that a PDB only limits voluntary evictions; it doesn't prevent involuntary disruptions such as a node failure. Implementing PDBs is a crucial practice for maintaining high availability and reliability, especially during planned upgrades and maintenance in an AKS environment.
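When a PDB uses a percentage, Kubernetes converts it to a pod count and rounds up, so the 70% figure holds exactly only when the replica count divides evenly. The hypothetical helper below reproduces that arithmetic so you can sanity-check a budget before applying it; it is a sketch of the documented rounding rule, not code taken from Kubernetes.

```bash
# pdb_budget REPLICAS MAX_UNAVAILABLE_PERCENT
# Hypothetical helper: converts a percentage maxUnavailable into pod counts
# the way Kubernetes documents it (the percentage of replicas is rounded UP).
# Prints "<pods that may be disrupted> <pods that must stay available>".
pdb_budget() {
  local replicas="$1" percent="$2" max_unavailable
  # Integer ceiling of replicas * percent / 100.
  max_unavailable=$(( (replicas * percent + 99) / 100 ))
  echo "$max_unavailable $(( replicas - max_unavailable ))"
}
```

With 10 replicas, `pdb_budget 10 30` prints `3 7`. With 7 replicas, the same 30% rounds up to 3 disruptions and leaves only 4 pods (about 57%) available, so use an integer `minAvailable` if you need a hard floor. On a live cluster, `kubectl get pdb my-pdb` shows how many disruptions are currently allowed in its ALLOWED DISRUPTIONS column.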

Workload-related Considerations

It's essential to tailor your approach to disruptions to the specific characteristics of each application. For a stateless frontend where you want to keep at least 90% of serving capacity during disruptions, configuring a PDB is relatively straightforward: set `minAvailable: 90%` so that voluntary disruptions never reduce capacity by more than 10%. Conversely, for a single-replica stateful workload where automatic termination is undesirable, you can configure the PDB with `maxUnavailable: 0`, which blocks voluntary evictions of that pod entirely. In this scenario, the cluster operator has to coordinate with the workload owner before an upgrade, and manual intervention is often preferable to an automated process.
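The two PDBs described above differ only in one field. Here is a minimal sketch of a hypothetical `pdb_manifest` helper that prints either one; the names and labels (`frontend`, `my-db`) are placeholders. With `maxUnavailable: 0` applied, the drain step of an AKS upgrade cannot evict that pod, so plan for the operator to handle it manually.

```bash
# pdb_manifest NAME APP_LABEL FIELD VALUE
# Hypothetical helper: prints a policy/v1 PodDisruptionBudget that selects
# pods labeled app=APP_LABEL. FIELD must be minAvailable or maxUnavailable.
pdb_manifest() {
  case "$3" in
    minAvailable|maxUnavailable) ;;
    *) echo "FIELD must be minAvailable or maxUnavailable" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: $1
spec:
  $3: $4
  selector:
    matchLabels:
      app: $2
EOF
}

# Stateless frontend: keep at least 90% of serving capacity.
#   pdb_manifest frontend-pdb frontend minAvailable 90% | kubectl apply -f -
# Single-instance stateful workload: block voluntary evictions entirely.
#   pdb_manifest my-db-pdb my-db maxUnavailable 0 | kubectl apply -f -
```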

For more complex, multi-instance stateful applications like ZooKeeper, which rely on a quorum to function, the PDB configuration is crucial. Set it so that a quorum is always maintained during disruptions, for example `minAvailable` equal to the quorum size (3 for a five-member ensemble) or `maxUnavailable: 1`. This balance protects the application's integrity while still allowing necessary maintenance and upgrades, which matters most for systems where high availability and data integrity are paramount.
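For a majority-quorum system such as ZooKeeper, the quorum is floor(n/2) + 1 members. The sketch below (hypothetical helper names, placeholder labels) derives `minAvailable` from the ensemble size, so the PDB follows the quorum if you change the replica count.

```bash
# quorum_size MEMBERS
# Majority quorum for an ensemble of MEMBERS voters: floor(n/2) + 1.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# quorum_pdb NAME APP_LABEL MEMBERS
# Hypothetical helper: prints a PDB whose minAvailable equals the quorum size,
# so voluntary evictions stop before the ensemble drops below a majority.
quorum_pdb() {
  local quorum
  quorum=$(quorum_size "$3")
  cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: $1
spec:
  minAvailable: $quorum
  selector:
    matchLabels:
      app: $2
EOF
}
```

For example, `quorum_pdb zk-pdb zookeeper 5 | kubectl apply -f -` would create a PDB with `minAvailable: 3`, which allows at most two members to be evicted while all five are healthy.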

Upgrade Process

As a disclaimer, when planning an AKS upgrade, first perform the upgrade in a test and/or staging environment. This mitigates risk by surfacing compatibility issues and performance impacts without affecting live apps. For critical production environments, also consider creating a new cluster that mirrors the production setup. This strategy allows comprehensive testing and a cutover with minimal downtime, and it keeps the original cluster available for rollback if needed, since it's currently not possible to revert a cluster to a prior K8s minor version. It does require planning, resources to run parallel environments, and careful synchronization until the cutover is complete.

To start the upgrade process, first determine the available upgrade paths for your cluster by running the following command:

```bash
az aks get-upgrades --resource-group myResourceGroup --name myAKSCluster -o table
```

```
Name     ResourceGroup    MasterVersion    Upgrades
-------  ---------------  ---------------  -----------------------------
default  myResourceGroup  1.27.1           1.27.3,1.27.7,1.28.0,1.28.3
```

The command returns a list of K8s versions to which your AKS cluster can be upgraded. This list includes only the versions compatible with your current setup and supported by Azure. Before initiating the upgrade, you can review the current configuration by running the following command:

```bash
az aks show --resource-group myResourceGroup --name myAKSCluster
```

To identify supported Kubernetes versions in your region for an AKS cluster upgrade, especially if you are on an unsupported minor version and intend to skip several minor versions, execute the following command:

```bash
az aks get-versions --location EastUS -o table
```

```
KubernetesVersion    Upgrades
-------------------  ------------------------
1.28.3               None available
1.28.0               1.28.3
1.27.7               1.28.0, 1.28.3
1.27.3               1.27.7, 1.28.0, 1.28.3
1.26.10              1.27.3, 1.27.7
1.26.6               1.26.10, 1.27.3, 1.27.7
1.25.15              1.26.6, 1.26.10
1.25.11              1.25.15, 1.26.6, 1.26.10
```

Having examined the existing setup and identified the available upgrade paths, we're prepared to proceed with the cluster upgrade. The command to initiate this process is as follows:

```bash
az aks upgrade --resource-group myResourceGroup --name myAKSCluster --kubernetes-version 1.28 --yes --verbose > upgrade.log 2>&1
```

This command upgrades the cluster with verbose logging and saves all output to upgrade.log. The `--yes` flag skips the confirmation prompt, which would otherwise be written to the redirected log instead of your terminal. The log can be crucial for diagnosing issues if the upgrade fails. For instance, when I attempted this upgrade, the log revealed that it failed because the agent node pool was still on version 1.26.6 while I was trying to upgrade to 1.28. Node pools can't skip minor versions, so the upgrade was rejected. I haven't discussed updating node images much here, but that is also an important aspect to consider in this context.

```
ERROR: (AgentPoolUpgradeVersionNotAllowed) Upgrade agent pool version from 1.26.6 to 1.28.3 is not allowed, you can only upgrade one minor version at a time. Please use [az aks get-upgrades] command to get the supported upgrade paths. For more information, please check https://aka.ms/aks/UpgradeVersionRules
Code: AgentPoolUpgradeVersionNotAllowed
Message: Upgrade agent pool version from 1.26.6 to 1.28.3 is not allowed, you can only upgrade one minor version at a time. Please use [az aks get-upgrades] command to get the supported upgrade paths. For more information, please check https://aka.ms/aks/UpgradeVersionRules
```
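This failure can be caught before calling `az aks upgrade`. The sketch below assumes node pool versions arrive as tab-separated name/version pairs, for example from `az aks nodepool list --resource-group myResourceGroup --cluster-name myAKSCluster --query "[].[name, currentOrchestratorVersion]" -o tsv`, and flags any pool more than one minor version behind the target. The function name is hypothetical, and it assumes every version shares major version 1.

```bash
# pools_too_far_behind TARGET_VERSION
# Hypothetical helper: reads "<pool name><TAB><version>" lines on stdin and
# prints every pool whose minor version is more than one behind the target.
# Returns 1 if any pool would be rejected. Assumes a single major version.
pools_too_far_behind() {
  local target_minor
  target_minor=$(echo "$1" | cut -d. -f2)
  awk -v t="$target_minor" '
    BEGIN { FS = "\t" }
    NF >= 2 {
      split($2, v, ".")
      if (t - v[2] > 1) { print $1 " (" $2 ")"; found = 1 }
    }
    END { exit (found ? 1 : 0) }'
}
```

Piped into `pools_too_far_behind 1.28`, the node pool list from the cluster above would have flagged the pool on 1.26.6. A flagged pool can also be upgraded on its own with `az aks nodepool upgrade --resource-group myResourceGroup --cluster-name myAKSCluster --name <pool-name> --kubernetes-version <version>`, one minor version at a time and never past the control plane's version.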

In AKS, the control plane can be upgraded on its own (for example, with `az aks upgrade --control-plane-only`), which can leave node pools on an older version. To bring my node pool back in line with the control plane's minor version, I ran the command below to upgrade to 1.27.7. By default, `az aks upgrade` upgrades the control plane and all node pools together; the `--yes` flag only skips the confirmation prompt.

```bash
az aks upgrade --resource-group myResourceGroup --name myAKSCluster --kubernetes-version 1.27.7 --yes --verbose > upgrade.log 2>&1
```

Finally, to reach my goal of 1.28, I ran the 1.28 upgrade command again. After this upgrade, the cluster was on patch version 1.28.3: if a specific patch version isn't stated, AKS defaults to the most recent patch release for that minor version.

```bash
az aks upgrade --resource-group myResourceGroup --name myAKSCluster --kubernetes-version 1.28 --yes --verbose > upgrade.log 2>&1
```

I'm using the Azure CLI here mainly to demonstrate the upgrade process, but you can also use an Infrastructure as Code (IaC) tool like Terraform to provision, manage, and upgrade your cluster, which also keeps a version-controlled record of all changes. Further, if you run many AKS clusters, you can use Azure Kubernetes Fleet Manager to orchestrate K8s version and node image upgrades across multiple clusters by using update runs, stages, and groups. For more information, please see: Fleet Manager
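The two-step sequence above (bringing the 1.26 node pool to 1.27, then everything to 1.28) can be scripted. This is a minimal sketch with hypothetical function names: it starts from the oldest version among the control plane and node pools, then upgrades one minor version at a time. It assumes a single major version and that `--kubernetes-version` accepts a minor-only value as shown above, and it does not handle patch-only or node image upgrades.

```bash
# next_minors CURRENT TARGET
# Prints each minor version to step through, one per line, from the minor
# after CURRENT up to and including TARGET's minor (same major assumed).
next_minors() {
  local major m last
  major=$(echo "$1" | cut -d. -f1)
  m=$(( $(echo "$1" | cut -d. -f2) + 1 ))
  last=$(echo "$2" | cut -d. -f2)
  while [ "$m" -le "$last" ]; do
    echo "$major.$m"
    m=$(( m + 1 ))
  done
}

# oldest_version: reads versions on stdin and prints the one with the
# lowest minor version. Fails if no version was read.
oldest_version() {
  awk -F. 'NF >= 2 && (min == "" || $2 + 0 < min + 0) { min = $2; v = $0 }
           END { if (v == "") exit 1; print v }'
}

# upgrade_stepwise RESOURCE_GROUP CLUSTER_NAME TARGET_MINOR
# Hypothetical helper: starts from the oldest version among the control plane
# and node pools, so a lagging node pool is brought forward first, then
# upgrades the whole cluster one minor version at a time.
upgrade_stepwise() {
  local rg="$1" cluster="$2" target="$3" oldest v
  oldest=$( {
      az aks show --resource-group "$rg" --name "$cluster" \
        --query currentKubernetesVersion -o tsv
      az aks nodepool list --resource-group "$rg" --cluster-name "$cluster" \
        --query "[].currentOrchestratorVersion" -o tsv
    } | oldest_version ) || return 1

  for v in $(next_minors "$oldest" "$target"); do
    echo "Upgrading $cluster to $v"
    # Without --control-plane-only, this upgrades the control plane and all node pools.
    az aks upgrade --resource-group "$rg" --name "$cluster" \
      --kubernetes-version "$v" --yes --verbose >> upgrade.log 2>&1 || return 1
  done
}
```

On the cluster described above, `upgrade_stepwise myResourceGroup myAKSCluster 1.28` would run the 1.27 and 1.28 upgrades in order, appending output to upgrade.log. In production, you would typically run the post-upgrade checks between steps rather than chaining them blindly.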

Post-Upgrade Review

After upgrading your Azure Kubernetes Service (AKS) cluster, it’s important to verify that the upgrade was successful. You can perform several checks using the Azure CLI and other tools to ensure the cluster and resources are functioning correctly. Here's a sample review process:

Verify K8s Minor Version

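```bash
az aks show --resource-group myResourceGroup --name myAKSCluster --query kubernetesVersion
```

```
"1.28"
```

Because the upgrade specified only the minor version, `kubernetesVersion` reports "1.28"; query `currentKubernetesVersion` instead to see the exact patch version the control plane is running.

Verify Node Version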

```bash
kubectl get nodes
```

```
NAME                                STATUS   ROLES   AGE   VERSION
aks-nodepool1-31636152-vmss000009   Ready    agent   34m   v1.28.3
```

Check recent events for warnings or errors

```bash
kubectl get events --all-namespaces --sort-by='.lastTimestamp'
```

```
NAMESPACE   LAST SEEN   TYPE     REASON
default     57m         Normal   Preempted
...
```

Confirm all the pod containers are running

```bash
kubectl get pods --all-namespaces
```

```
NAMESPACE     NAME                           READY
kube-system   ama-logs-jwz6j                 3/3
kube-system   ama-logs-rs-7c65b759df-xkqtv   2/2
kube-system   ama-metrics-5dc7c45886-9m8zw   2/2
...
```

Inspect resource utilization on nodes and pods

```bash
kubectl top nodes
```

```
NAME                                 CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
aks-systempool-20023331-vmss000000   117m         3%     2421Mi          45%
```

```bash
kubectl top pods --all-namespaces
```

```
NAMESPACE   NAME                                CPU(cores)   MEMORY(bytes)
default     nginx-deployment-66f8758855-5t6j5   0m           1Mi
default     nginx-deployment-66f8758855-jm5vc   0m           1Mi
```

Inspect logs for pod containers (if there are restarts or errors)

```bash
kubectl logs <pod-name> -n <namespace>
# For a container that restarted, view the logs of its previous instance
kubectl logs <pod-name> -n <namespace> --previous
```

Verifying and validating an AKS cluster after an upgrade lets you quickly identify and address emerging issues. You can automate these checks in a CI/CD pipeline that runs kubectl and az CLI commands to confirm that the cluster and its nodes are functioning correctly, that apps continue to run, and that resource utilization and application compatibility look as expected. Post-upgrade validation also helps keep the cluster compliant with security and operational standards, and it is key to maintaining confidence in the stability and reliability of your K8s infrastructure.
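As a starting point for such a pipeline step, here is a minimal sketch with hypothetical function names. Only the node version check fails the step; the pod and event listings are informational, because a pod in CrashLoopBackOff still reports phase Running and is better caught by reviewing restart counts. The expected kubelet version `v1.28.3` in the usage line comes from the output shown earlier.

```bash
# nodes_not_on_version EXPECTED
# Reads "<node name> <kubelet version>" lines on stdin, prints any node not on
# EXPECTED (for example v1.28.3), and returns 1 if at least one is found.
nodes_not_on_version() {
  awk -v want="$1" '
    NF >= 2 && $2 != want { print $1 " " $2; bad = 1 }
    END { exit (bad ? 1 : 0) }'
}

# post_upgrade_check RESOURCE_GROUP CLUSTER_NAME EXPECTED_KUBELET_VERSION
# Hypothetical pipeline step. Only the node version check fails the step;
# the pod and event listings are printed for review.
post_upgrade_check() {
  local rg="$1" cluster="$2" want="$3" cp nodes
  cp=$(az aks show --resource-group "$rg" --name "$cluster" \
    --query currentKubernetesVersion -o tsv)
  echo "Control plane: $cp"

  nodes=$(kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.kubeletVersion}{"\n"}{end}') || return 1
  [ -n "$nodes" ] || { echo "no nodes returned" >&2; return 1; }
  if ! printf '%s\n' "$nodes" | nodes_not_on_version "$want"; then
    echo "the nodes above are not on $want" >&2
    return 1
  fi

  # Pods that are neither Running nor Succeeded (e.g. Pending or Failed).
  # CrashLoopBackOff pods still report phase Running; check restart counts too.
  kubectl get pods --all-namespaces \
    --field-selector=status.phase!=Running,status.phase!=Succeeded

  # Warning events, oldest first.
  kubectl get events --all-namespaces --field-selector type=Warning \
    --sort-by=.lastTimestamp
}
```

Usage: `post_upgrade_check myResourceGroup myAKSCluster v1.28.3`.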

Best Practices for Ongoing Maintenance

Upgrading and Maintenance Strategy

To maintain and upgrade an AKS cluster effectively, you need a maintenance and upgrade strategy. This includes a Planned Maintenance schedule that defines the time windows in which maintenance tasks, including upgrades, can run. Updating the cluster regularly keeps you on the latest features and security enhancements. For upgrades, test first in a staging environment that mirrors production, so potential issues are identified and resolved without impacting production. Such preemptive testing is key to maintaining service continuity and avoiding unforeseen complications.
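As an example of a Planned Maintenance schedule, the sketch below wraps two Azure CLI calls in a hypothetical function: a weekly window for AKS-managed auto-upgrades, plus the `patch` auto-upgrade channel so that patch releases (good candidates for automation, as noted earlier) land inside that window. The day, start time, and four-hour duration are placeholder choices; confirm the flags with `az aks maintenanceconfiguration add --help` for your CLI version.

```bash
# set_upgrade_window RESOURCE_GROUP CLUSTER_NAME
# Hypothetical helper: schedules AKS-managed auto-upgrades into a weekly
# Saturday window (00:00 UTC, 4 hours) and enables the "patch" channel.
set_upgrade_window() {
  local rg="$1" cluster="$2"

  # aksManagedAutoUpgradeSchedule governs when auto-upgrades may run.
  az aks maintenanceconfiguration add \
    --resource-group "$rg" --cluster-name "$cluster" \
    --name aksManagedAutoUpgradeSchedule \
    --schedule-type Weekly --interval-weeks 1 --day-of-week Saturday \
    --start-time 00:00 --duration 4 --utc-offset +00:00 || return 1

  # Automatically apply the latest patch release of the current minor version.
  az aks update --resource-group "$rg" --name "$cluster" \
    --auto-upgrade-channel patch
}
```

Usage: `set_upgrade_window myResourceGroup myAKSCluster`. Minor version upgrades stay a deliberate, tested step under this setup.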

Governance

Regularly updating the cluster's security configurations, such as network policies and access controls, is critical to safeguarding against evolving threats. Built-in Azure Policy definitions can further enhance security and compliance: they help enforce consistency and audit clusters that need upgrades, mitigating vulnerabilities associated with outdated Kubernetes versions. Here's an excerpt from one such built-in policy for AKS clusters. It identifies clusters running potentially vulnerable Kubernetes versions; this excerpt lists the 1.13 releases below 1.13.5, and the policy audits any cluster whose version matches:

```json
"policyRule": {
  "if": {
    "allOf": [
      {
        "field": "type",
        "equals": "Microsoft.ContainerService/managedClusters"
      },
      {
        "anyOf": [
          {
            "field": "Microsoft.ContainerService/managedClusters/kubernetesVersion",
            "in": [
              "1.13.4",
              "1.13.3",
              "1.13.2",
              "1.13.1",
              "1.13.0"
            ]
          },
```

Conclusion

In summary, effectively managing K8s version upgrades in AKS is essential for security, performance, and leveraging new features. It involves staying updated on releases, thorough testing in staging, and using Azure and possibly open-source tools for automation and maintaining compliance. Practices such as regular monitoring, backups, and task automation are key to a seamless upgrade process. A strategic approach to AKS upgrades ensures secure, updated, and efficiently managed clusters, crucial for the reliability and success of app deployments on AKS.