Network Plugins in Azure Kubernetes Service (AKS)

Choosing the Right Plugin: Understanding the Pros/Cons

Introduction

When you create an Azure Kubernetes Service (AKS) cluster, there are two built-in configuration options for the network plugin: Kubenet and the Azure Container Networking Interface (CNI). If neither option satisfies your workload requirements, you can Bring Your Own Container Networking Interface (BYOCNI), but keep in mind that Microsoft does not support or take responsibility for CNI-related issues in that case. The network plugin is used by the Container Runtime on each node to implement the Kubernetes network model. If the network plugin is not explicitly specified at cluster creation, AKS defaults to the Kubenet plugin; if the workload requires Azure CNI, it must be specified explicitly at cluster creation. Because changing the network plugin currently requires rebuilding the cluster, it’s important to choose the right one up front.

Kubernetes Network Model

A Kubernetes cluster consists of a set of nodes that exist on a network. Pods run on these nodes and get their own IP addresses. The network model describes how those pod IPs integrate with the larger network. To implement the Kubernetes network model, the following two fundamental requirements need to be satisfied:

Pods on a node can communicate with all pods on all nodes without Network Address Translation (NAT).

Agents on a node (e.g., system daemons, Kubelet) can communicate with all pods on that node.

Network Plugin Overview

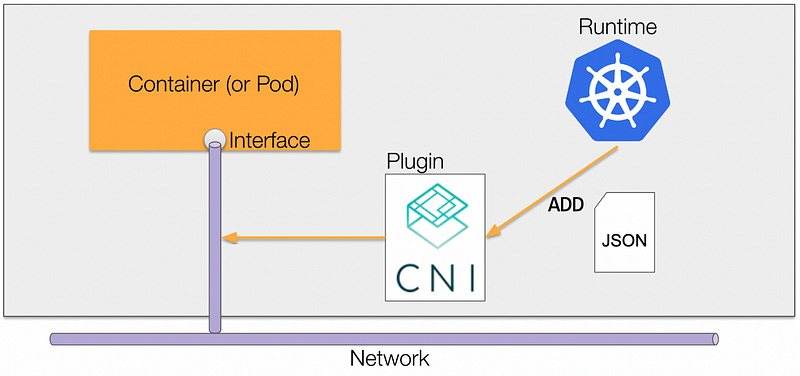

A network plugin, such as the Azure CNI, is required to implement the Kubernetes network model. As illustrated in Figure 1 above, it works in the following way:

Pod begins without a network interface.

Container Runtime makes an API call to CNI.

CNI plugin has a set of verbs, such as ADD and DEL, to add or delete a network interface to/from a pod, respectively.

With the Azure CNI plugin in particular, the pod then gets assigned an IP address from a subnet CIDR.
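The ADD/DEL flow above can be illustrated with a toy model. This is only a sketch: a real CNI plugin is a separate binary invoked by the container runtime, whereas the `ToyCniPlugin` class, its method names, and the /29 subnet below are hypothetical and exist purely to show how ADD hands out an IP from a subnet CIDR and DEL releases it.

```python
import ipaddress


class ToyCniPlugin:
    """Toy stand-in for a CNI plugin: hands out pod IPs from one subnet CIDR."""

    def __init__(self, subnet_cidr):
        self.subnet = ipaddress.ip_network(subnet_cidr)
        self.assigned = {}  # pod name -> IP address

    def add(self, pod_name):
        """ADD verb: give the pod the first free IP in the subnet."""
        if pod_name in self.assigned:
            return self.assigned[pod_name]
        in_use = set(self.assigned.values())
        for ip in self.subnet.hosts():
            if ip not in in_use:
                self.assigned[pod_name] = ip
                return ip
        raise RuntimeError("subnet has no free IP addresses")

    def delete(self, pod_name):
        """DEL verb: release the pod's IP so it can be reused."""
        self.assigned.pop(pod_name, None)


# Illustrative subnet only; a pod starts with no interface until ADD is called.
plugin = ToyCniPlugin("10.110.0.0/29")
first_ip = plugin.add("pod-a")
second_ip = plugin.add("pod-b")
plugin.delete("pod-a")
reused_ip = plugin.add("pod-c")  # picks up the address released by pod-a
print(first_ip, second_ip, reused_ip)
```

The model ignores details such as network namespaces and interface creation; it only tracks the address bookkeeping that the ADD and DEL verbs imply.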

Kubenet

Kubenet is the default (basic) network plugin configuration in AKS. Unlike Azure CNI, which is covered next, Kubenet assigns nodes and pods IP addresses from two distinct address spaces. Nodes receive an IP address from a VNet subnet, whereas pods receive an IP address from a logically distinct address space that can be explicitly configured. Because pods and nodes use two different IP address ranges, pods can’t communicate across nodes without user-defined routing (UDR) and IP forwarding. To verify that the AKS cluster is using the Kubenet network plugin and to see its IP address ranges, run the AZ CLI command below.

az aks show -n aks-demo -g k8s-demo-rg --query networkProfile -o json

{

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"networkPlugin": "kubenet",

"networkPolicy": null,

"podCidr": "178.16.28.1/24",

"serviceCidr": "10.0.0.0/16"

}

Since pods are assigned IP addresses from a dedicated podCidr, the primary benefit of the Kubenet plugin is that IP address exhaustion is unlikely (relative to Azure CNI). However, because Kubenet is a basic network plugin, it has some drawbacks, including:

Overhead from managing user-defined routes (UDR)

Lack of support for advanced features like Azure Network Policy or virtual nodes

No support for scaling the AKS cluster above 400 nodes

Performance overhead due to additional NATing
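To make the routing overhead concrete, the sketch below builds the kind of route table Kubenet depends on: one user-defined route per node that maps the node's pod range to the node's IP as the next hop. It assumes each node receives a /24 slice of the pod CIDR and that a route table holds at most 400 routes (an assumption consistent with the 400-node limit above). `build_routes`, the pod CIDR, and the node IPs are illustrative and are not part of any Azure API.

```python
import ipaddress

MAX_ROUTES_PER_TABLE = 400  # assumption: one route per node, hence the node limit


def build_routes(pod_cidr, node_ips, node_prefix=24):
    """Return one (pod range, next hop) route per node."""
    network = ipaddress.ip_network(pod_cidr)
    if len(node_ips) > MAX_ROUTES_PER_TABLE:
        raise ValueError("more nodes than the route table can hold")
    slice_count = 2 ** (node_prefix - network.prefixlen)
    if len(node_ips) > slice_count:
        raise ValueError("pod CIDR is too small for this many nodes")
    slices = network.subnets(new_prefix=node_prefix)
    return [(str(pod_range), node_ip) for pod_range, node_ip in zip(slices, node_ips)]


# Illustrative values: two nodes, each routing its own /24 of pod addresses.
routes = build_routes("10.244.0.0/16", ["10.240.0.4", "10.240.0.5"])
for pod_range, next_hop in routes:
    print(f"{pod_range} -> next hop {next_hop}")
```

Every node added to the cluster adds a route, which is why the route table size caps the cluster size in this model.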

Azure Container Networking Interface (CNI)

The Azure CNI facilitates the assignment of IP addresses to all pods from the address range of the subnet. These are private IPv4 addresses (from the RFC 1918 range) that must not overlap with other addresses from the Azure Virtual Networks (VNets) or other connected networks’ address space.

With Azure CNI, every pod gets an IP address from the subnet and can be accessed directly. These IP addresses must be unique across your network space and must be planned in advance. Each node has a configuration parameter for the maximum number of pods that it supports, and the equivalent number of IP addresses is reserved up front for that node. This approach requires more planning, and it can lead to IP address exhaustion or the need to rebuild clusters in a larger subnet as your application demands grow.
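The up-front reservation can be estimated with simple arithmetic. The sketch below assumes each node consumes one IP for itself plus one per pod slot, and that Azure reserves five addresses in every subnet. The helper names, the /22 subnet, and the max-pods values of 30 and 250 are sample inputs chosen for illustration.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # addresses Azure keeps for itself in each subnet


def usable_ips(subnet_cidr):
    """Addresses in the subnet that can actually be handed to nodes and pods."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - AZURE_RESERVED_PER_SUBNET


def ips_reserved(node_count, max_pods):
    """One IP per node plus max_pods IPs reserved up front on each node."""
    return node_count * (max_pods + 1)


def max_nodes(subnet_cidr, max_pods):
    """How many nodes fit before the subnet is exhausted."""
    return usable_ips(subnet_cidr) // (max_pods + 1)


print(usable_ips("10.110.0.0/22"))      # 1019
print(ips_reserved(3, 30))              # 93
print(max_nodes("10.110.0.0/22", 30))   # 32
print(max_nodes("10.110.0.0/22", 250))  # 4
```

Raising the per-node pod limit shrinks the number of nodes a subnet can hold, which is why the subnet size and the pod density need to be planned together.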

To confirm the network plugin of an existing AKS cluster, run the AZ CLI command below. A NetworkPlugin value of azure indicates Azure CNI.

az aks show -n $AKS_NAME -g $AKS_RG --query "{NetworkPlugin:networkProfile.networkPlugin}" -o table

NetworkPlugin

---------------

azure

With the Azure CNI, pods and nodes obtain their IP addresses from the subnet in the VNet where the cluster nodes are deployed. The AZ CLI command below returns the AddressPrefix in CIDR format for the subnets.

az network vnet subnet list --resource-group $VNET_RG --vnet-name $VNET_NAME --query "[].addressPrefix" -o table

Result

-------------

10.110.0.0/22

Now let’s confirm the IP addresses of the cluster nodes and pods using the kubectl client.

kubectl get nodes -o='custom-columns=NodeName:.metadata.name,IP:.status.addresses[0].address'

NodeName IP

aks-nodepool1-19921692-vmss000004 10.110.0.4

aks-nodepool1-19921692-vmss000005 10.110.0.33

kubectl get pods -o='custom-columns=PodName:.metadata.name,IP:.status.podIP'

PodName IP

test-8499f4f74-f6lck 10.110.0.50

test-8499f4f74-f7676 10.110.0.17

test-8499f4f74-h69tg 10.110.0.53

test-8499f4f74-wwmng 10.110.0.13

Note that the IP addresses assigned to both the nodes and pods are within the subnet’s CIDR range of 10.110.0.0/22. Every node is configured with a primary IP address, and by default Azure CNI pre-configures 30 additional IP addresses to be assigned to pods scheduled on that node. When you scale out your cluster, each new node is similarly configured with IP addresses from the subnet. A Kubelet flag enforces the maximum number of pods that can be scheduled on a node, and that value can be confirmed with the following command.

kubectl get nodes aks-nodepool1-19921692-vmss00000a -o jsonpath='{.status.allocatable.pods}'

30

The default of 30 IP addresses per node is configurable up to 250 at deployment time. So, depending on the workload density, it’s important to plan the expected IP address usage ahead of time. Also keep a reserve of available IP addresses for cluster upgrades, rolling updates, and horizontal scaling of nodes or pod replicas.
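As a rough check of the numbers in this section, the sketch below confirms that the node and pod addresses listed earlier fall inside 10.110.0.0/22, then estimates whether a planned cluster still fits once headroom for an upgrade is included. The single surge node and the five addresses reserved per subnet are assumptions, and `ips_needed` is a hypothetical helper.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # assumption: addresses Azure holds back per subnet

subnet = ipaddress.ip_network("10.110.0.0/22")

# Node and pod IPs copied from the kubectl output above.
observed = ["10.110.0.4", "10.110.0.33", "10.110.0.50",
            "10.110.0.17", "10.110.0.53", "10.110.0.13"]
outside = [ip for ip in observed if ipaddress.ip_address(ip) not in subnet]
print("addresses outside the subnet:", outside)


def ips_needed(node_count, max_pods, surge_nodes=1):
    """IPs consumed by the nodes plus temporary surge nodes during an upgrade."""
    return (node_count + surge_nodes) * (max_pods + 1)


usable = subnet.num_addresses - AZURE_RESERVED_PER_SUBNET
for nodes, max_pods in [(2, 30), (4, 250)]:
    needed = ips_needed(nodes, max_pods)
    verdict = "fits" if needed <= usable else "does not fit"
    print(f"{nodes} nodes at max-pods {max_pods}: need {needed} of {usable}, {verdict}")
```

Under these assumptions, two nodes at the default of 30 pods leave plenty of room, while four nodes at 250 pods would already exceed the /22 during an upgrade.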

Dynamic IP Allocation

As previously mentioned, one disadvantage of the conventional Azure CNI is that as the AKS cluster expands, the pool of pod IP addresses gets depleted, necessitating the rebuilding of the entire cluster in a larger subnet. This issue is addressed by Azure CNI’s dynamic IP allocation feature, which assigns pod IP addresses from a subnet that is separate from the one hosting the cluster nodes. The feature makes IP address planning less complicated. Because nodes and pods can be scaled independently, their address spaces can also be designed separately.
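Sizing the two address spaces separately might look like the sketch below. It picks the smallest prefix that covers a required number of addresses, assuming Azure reserves five addresses per subnet and that /29 is the smallest subnet allowed. The node and pod counts are made-up planning inputs, and `smallest_prefix` is a hypothetical helper.

```python
AZURE_RESERVED_PER_SUBNET = 5  # assumption: addresses Azure holds back per subnet
MIN_HOST_BITS = 3              # assumption: /29 is the smallest subnet


def smallest_prefix(required_ips):
    """Smallest IPv4 prefix length whose usable space covers required_ips."""
    total = required_ips + AZURE_RESERVED_PER_SUBNET
    host_bits = max((total - 1).bit_length(), MIN_HOST_BITS)
    return 32 - host_bits


# Hypothetical plan: 50 nodes, up to 1500 pods across the cluster.
node_prefix = smallest_prefix(50)
pod_prefix = smallest_prefix(1500)
print(f"node subnet: /{node_prefix}, pod subnet: /{pod_prefix}")
```

With these inputs the node subnet only needs a /26, while the pod subnet needs a /21, and either can be resized in a later plan without affecting the other.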

It is possible to configure pod subnets down to the level of a node pool, enabling the addition of a new subnet when a node pool is added. However, it is important to consider that system pods in a cluster/node pool also receive IPs from the pod subnet. The deployment parameters needed to set up the Azure CNI networking in AKS are mostly unchanged, but there are two exceptions to be aware of. Firstly, the “subnet” parameter now refers to the subnet that is associated with the AKS cluster’s nodes. Secondly, there is a new parameter called “pod subnet” which should be used to specify the subnet that will provide IP addresses to pods through dynamic allocation.

In the example below, to create the cluster, you should reference the node subnet by using the flag “--vnet-subnet-id”, and specify the pod subnet by using the flag “--pod-subnet-id”.

# resourceGroup, location, and vnet are assumed to be set in the shell already

clusterName="myAKSCluster"

subscription="aaaa-aaaaa-aaaa-aaaaa"

az aks create -n $clusterName -g $resourceGroup -l $location \
--max-pods 250 \
--node-count 2 \
--network-plugin azure \
--vnet-subnet-id /subscriptions/$subscription/resourceGroups/$resourceGroup/providers/Microsoft.Network/virtualNetworks/$vnet/subnets/nodesubnet \
--pod-subnet-id /subscriptions/$subscription/resourceGroups/$resourceGroup/providers/Microsoft.Network/virtualNetworks/$vnet/subnets/podsubnet

Azure CNI Overlay

Azure CNI Overlay is another AKS feature that helps alleviate IPv4 address exhaustion. It allows for the deployment of cluster nodes to a subnet within an Azure Virtual Network (VNet), while pods are assigned IP addresses from a separate private CIDR. An overlay network is used for communication between pods and nodes within the cluster, and Network Address Translation (NAT) is used to access resources outside the cluster. This feature conserves VNet IP addresses, scales to larger clusters, and allows the same private CIDR to be reused in different AKS clusters, leaving more IP space for containerized applications.

In overlay networking, cluster nodes are allocated IP addresses from a subnet within the Azure VNet, while pods are assigned IP addresses from a distinct, private CIDR range that is separate from the VNet’s address space. Each node in the cluster is given a slice of the cluster-wide pod CIDR, often a /24 range, and the pods scheduled on that node receive their IP addresses from that slice. The overlay network facilitates direct pod-to-pod communication by routing pod traffic over the node network, creating an overlay atop the existing VNet. This method enables pods to communicate across nodes without the need for additional custom routes in the VNet, providing a connectivity experience that is similar to VMs within the same VNet. When you provision an AKS cluster with the Azure CNI network plugin in overlay mode, here’s what it looks like:

Get Cluster Info

az aks show -n $myAKSCluster -g $myResourceGroup --query "networkProfile"

{

"networkMode": "overlay",

"networkPlugin": "azure",

"podCidr": "10.244.0.0/16",

...

}

Check Node IP

kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP

aks-nodepool1-18821690-0 Ready agent 10d v1.25.7 10.240.0.4

...

Check Pod IP

kubectl get pods --all-namespaces -o=custom-columns=NAME:.metadata.name,POD_IP:.status.podIP,NODE:.spec.nodeName

NAME POD_IP NODE

my-app 10.244.0.2 aks-nodepool1-18821690-0

Learn more here: https://github.com/hariscats/AksNetworkingDemo
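The relationship between the cluster-wide podCidr and the per-node slices in the overlay example can be sketched as follows. The code assumes a /24 slice per node, as described above, and uses the podCidr and pod IP from the sample output. `node_slice_for` is a hypothetical helper.

```python
import ipaddress


def node_slice_for(pod_ip, pod_cidr, node_prefix=24):
    """Return the per-node slice of the pod CIDR that contains pod_ip."""
    network = ipaddress.ip_network(pod_cidr)
    if ipaddress.ip_address(pod_ip) not in network:
        raise ValueError(f"{pod_ip} is not inside {pod_cidr}")
    # strict=False masks the host bits, yielding the enclosing /24
    return ipaddress.ip_network(f"{pod_ip}/{node_prefix}", strict=False)


pod_network = ipaddress.ip_network("10.244.0.0/16")
slice_count = 2 ** (24 - pod_network.prefixlen)  # number of /24 node slices
node_slice = node_slice_for("10.244.0.2", "10.244.0.0/16")
print(f"{slice_count} node slices available; my-app sits in {node_slice}")
```

Because the pod CIDR is private to the cluster, the same 10.244.0.0/16 range could be reused by another cluster without consuming any VNet addresses.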

Summary

In Kubernetes, network plugins are essential for implementing the platform’s networking model, and a variety of options, both open-source and proprietary, are available to suit different needs. For AKS deployments, Azure CNI in Overlay mode is typically recommended. It simplifies IP management, enhances scalability, and offers performance comparable to Azure VMs within the same VNet. However, the choice of network plugin should be based on specific workload requirements, operational considerations, and compatibility with existing infrastructure. While Azure CNI is well-suited for large-scale and tightly integrated AKS deployments, alternatives like Calico or Flannel might be more appropriate in certain scenarios. For comprehensive guidance, see the official Kubernetes and Azure documentation on network plugins and their configuration in AKS.